NVIDIA has introduced Dynamo, open-source inference software designed to accelerate and scale reasoning models in AI production facilities.

Efficiently managing and coordinating AI inference requests across a fleet of GPUs is essential if AI production facilities are to run at the lowest possible cost and maximise token revenue.

As AI reasoning becomes more widespread, each model is expected to generate tens of thousands of tokens per prompt, in effect representing its “thought” process. Improving inference performance while lowering its cost is therefore crucial for accelerating growth and increasing revenue opportunities for service providers.

A new generation of AI inference software

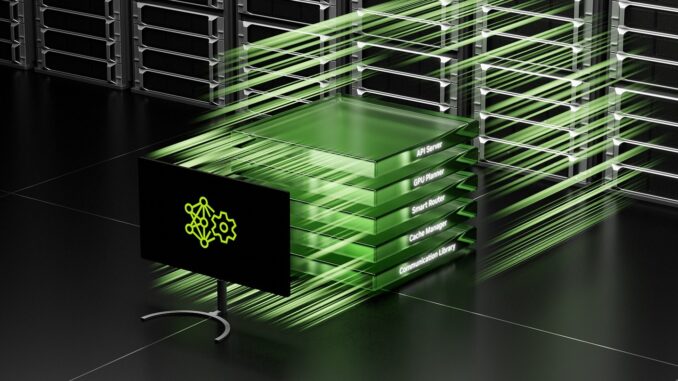

NVIDIA Dynamo, which succeeds the NVIDIA Triton Inference Server, is a new generation of AI inference software designed to maximise token revenue for AI production facilities deploying reasoning AI models.

Dynamo orchestrates and accelerates inference communication across potentially thousands of GPUs. It uses disaggregated serving, a technique that splits the processing (prefill) and generation (decode) phases of large language models (LLMs) across different GPUs. Each phase can then be optimised independently for its own computational needs, maximising GPU utilisation.

“Industries globally are training AI models to think and learn in various manners, making them more advanced over time,” declared Jensen Huang, founder and CEO of NVIDIA. “To foster a future of bespoke reasoning AI, NVIDIA Dynamo aids in serving these models at scale, promoting cost reductions and efficiencies throughout AI production facilities.”

Using the same number of GPUs, Dynamo has been shown to double the performance and revenue of AI production facilities serving Llama models on NVIDIA’s current Hopper platform. When running the DeepSeek-R1 model on a large cluster of GB200 NVL72 racks, NVIDIA Dynamo’s intelligent inference optimisations have also been shown to increase the number of tokens generated per GPU by more than 30 times.

To achieve these gains, NVIDIA Dynamo incorporates several key features designed to increase throughput and reduce operating costs.

Dynamo can dynamically add, remove, and reallocate GPUs in real time to adapt to fluctuating request volumes and types. The software can also pinpoint the specific GPUs in a large cluster that can minimise response computations and route queries to them. In addition, Dynamo can offload inference data to more affordable memory and storage devices and retrieve it quickly when needed, reducing overall inference costs.
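The first of these capabilities, sizing the GPU pool to match demand, can be illustrated with a minimal sketch. This is a toy calculation and not Dynamo's actual planner or API: the function name `plan_gpus`, the per-GPU capacity figure, and the 20% headroom are all assumptions made for illustration.

```python
import math

def plan_gpus(request_rate, per_gpu_capacity, min_gpus=1, max_gpus=64, headroom=0.2):
    """Return the GPU count a toy planner would target.

    request_rate:     observed requests per second (hypothetical metric)
    per_gpu_capacity: requests per second one GPU can sustain (assumed known)
    headroom:         spare fraction kept in reserve to absorb bursts
    """
    needed = math.ceil(request_rate * (1 + headroom) / per_gpu_capacity)
    # Clamp to the size limits of the cluster.
    return max(min_gpus, min(max_gpus, needed))

current = 4
for rate in (30, 120, 10):
    target = plan_gpus(rate, per_gpu_capacity=10)
    action = "add" if target > current else "remove" if target < current else "keep"
    print(f"rate={rate:>3} req/s: {action} -> {target} GPUs")
    current = target
```

With these made-up numbers the pool stays at 4 GPUs, grows to 15 during the spike, and shrinks to 2 when traffic drops. A production planner would also have to account for request types, sequence lengths, and the cost of moving work between GPUs.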

NVIDIA Dynamo is being released as a fully open-source project with broad compatibility with popular frameworks such as PyTorch, SGLang, NVIDIA TensorRT-LLM, and vLLM. This open approach supports enterprises, startups, and researchers in developing and optimising new ways of serving AI models across disaggregated inference infrastructure.

NVIDIA expects Dynamo to accelerate the adoption of AI inference across a wide range of organisations, including major cloud providers and AI innovators such as AWS, Cohere, CoreWeave, Dell, Fireworks, Google Cloud, Lambda, Meta, Microsoft Azure, Nebius, NetApp, OCI, Perplexity, Together AI, and VAST.

NVIDIA Dynamo: Amplifying inference and agentic AI

A key innovation of NVIDIA Dynamo is its ability to map the knowledge that inference systems hold in memory from serving previous requests, known as the KV cache, across potentially thousands of GPUs.

The software then routes new inference requests to the GPUs with the best knowledge match, avoiding costly recomputation and freeing other GPUs to handle new incoming requests. This smart routing significantly increases efficiency and reduces latency.
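The routing idea can be sketched in a few lines. The example below is a simplified illustration and not Dynamo's router: it assumes each worker advertises the token prefixes it has cached, and it picks the worker whose cache shares the longest prefix with the new request, breaking ties by lower load. The names `route`, `cached_prefixes`, and `load` are hypothetical.

```python
def shared_prefix_len(a, b):
    """Number of leading tokens two token sequences have in common."""
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n

def route(request_tokens, cached_prefixes, load):
    """Pick the worker with the longest cached prefix; break ties by lower load.

    cached_prefixes: worker name -> token sequences already in its KV cache
    load:            worker name -> number of in-flight requests
    """
    def score(worker):
        overlap = max(
            (shared_prefix_len(request_tokens, p) for p in cached_prefixes[worker]),
            default=0,
        )
        return (overlap, -load[worker])
    return max(cached_prefixes, key=score)

cached = {
    "gpu-0": [["system", "you", "are", "helpful", "summarise"]],
    "gpu-1": [["system", "you", "are", "helpful", "translate", "to", "french"]],
    "gpu-2": [],
}
load = {"gpu-0": 2, "gpu-1": 5, "gpu-2": 0}
request = ["system", "you", "are", "helpful", "translate", "to", "german"]
print(route(request, cached, load))  # gpu-1: six of the seven tokens are already cached
```

Here the request goes to `gpu-1` even though it is the busiest worker, because only the final token would need fresh computation. A real router would weigh cache overlap against load rather than always preferring overlap.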

“To manage hundreds of millions of requests monthly, we depend on NVIDIA GPUs and inference software to deliver the performance, reliability, and scalability our business and users demand,” noted Denis Yarats, CTO of Perplexity AI.

“We are eager to leverage Dynamo, with its improved distributed serving features, to drive even greater inference-serving efficiencies and satisfy the computational needs of new AI reasoning models.”

AI platform Cohere is already planning to use NVIDIA Dynamo to enhance the agentic AI capabilities of its Command series of models.

“Scaling advanced AI models necessitates sophisticated multi-GPU scheduling, seamless coordination, and low-latency communication libraries that transfer reasoning contexts fluidly across memory and storage,” explained Saurabh Baji, SVP of engineering at Cohere.

“We anticipate NVIDIA Dynamo will assist us in delivering an exceptional user experience to our enterprise clients.”

Support for disaggregated serving

The NVIDIA Dynamo inference platform also features robust support for disaggregated serving. This technique assigns the different computational phases of LLMs, including understanding the user query and then generating the most appropriate response, to different GPUs within the infrastructure.

Disaggregated serving is particularly well-suited to reasoning models, such as the new NVIDIA Llama Nemotron model family, which employs advanced inference techniques for improved contextual understanding and response generation. By allowing each phase to be tuned and resourced independently, disaggregated serving improves overall throughput and delivers faster response times to users.

Together AI, a prominent player in the AI Acceleration Cloud space, is also looking to integrate its proprietary Together Inference Engine with NVIDIA Dynamo. The integration aims to enable seamless scaling of inference workloads across multiple GPU nodes. It will also allow Together AI to dynamically address traffic bottlenecks that may arise at various stages of the model pipeline.
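To make the split concrete, the sketch below models the two phases as separate worker pools that can be sized independently. It is a toy simulation under stated assumptions, not Dynamo code: the classes `PrefillWorker`, `DecodeWorker`, and `KVCache` are hypothetical, the "model" just emits placeholder tokens, and the hand-off of the cache between GPUs is reduced to passing a Python object.

```python
from dataclasses import dataclass, field

@dataclass
class KVCache:
    """Stand-in for the attention state built while reading the prompt."""
    tokens: list = field(default_factory=list)

class PrefillWorker:
    """Reads the whole prompt in one pass (typically compute-bound)."""
    def __init__(self, name):
        self.name = name

    def run(self, prompt_tokens):
        return KVCache(tokens=list(prompt_tokens))

class DecodeWorker:
    """Generates output one token at a time (typically memory-bandwidth-bound)."""
    def __init__(self, name):
        self.name = name

    def run(self, cache, max_new_tokens):
        output = []
        for _ in range(max_new_tokens):
            token = f"tok{len(cache.tokens)}"  # placeholder for a model forward pass
            cache.tokens.append(token)
            output.append(token)
        return output

def serve(prompt_tokens, prefill_pool, decode_pool, request_id, max_new_tokens=3):
    # Round-robin within each pool; the pools can have different sizes.
    prefill = prefill_pool[request_id % len(prefill_pool)]
    decode = decode_pool[request_id % len(decode_pool)]
    cache = prefill.run(prompt_tokens)
    # In a real deployment the cache would be transferred between GPUs here.
    return prefill.name, decode.name, decode.run(cache, max_new_tokens)

prefill_pool = [PrefillWorker("prefill-0")]
decode_pool = [DecodeWorker(f"decode-{i}") for i in range(3)]
print(serve(["what", "is", "dynamo"], prefill_pool, decode_pool, request_id=4))
```

The example uses one prefill worker and three decode workers to show that each pool can be scaled to its own bottleneck, which is the property the article attributes to disaggregated serving.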

“Scaling reasoning models cost-effectively necessitates innovative advanced inference techniques, including disaggregated serving and context-aware routing,” asserted Ce Zhang, CTO of Together AI.

“The openness and modularity of NVIDIA Dynamo will facilitate the seamless integration of its components into our engine to serve more requests while optimising resource utilisation—maximising our accelerated computing investment. We’re enthusiastic about leveraging the platform’s groundbreaking capabilities to economically provide open-source reasoning models to our users.”

Four key innovations of NVIDIA Dynamo

NVIDIA has highlighted four key innovations within Dynamo that help reduce inference serving costs and improve the overall user experience:

GPU Planner: A sophisticated planning engine that dynamically adds and removes GPUs based on fluctuating user demand. This ensures optimal resource distribution, averting both over- and under-provisioning of GPU capacity.

Smart Router: An intelligent, LLM-aware router that directs inference requests across extensive fleets of GPUs. Its main function is to minimise costly GPU recomputations of repeated or overlapping requests, thereby freeing up valuable GPU resources to manage new incoming requests more effectively.

Low-Latency Communication Library: An inference-optimised library designed to support state-of-the-art GPU-to-GPU communication. It abstracts the complexities of data exchange across heterogeneous devices, significantly accelerating data transfer speeds.

Memory Manager: An intelligent engine that oversees the offloading and reloading of inference data to and from lower-cost memory and storage devices. This operation is designed to be seamless, ensuring no adverse effects on the user experience.
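The offload-and-reload behaviour described for the Memory Manager resembles a tiered cache. The following is a minimal sketch of that general pattern, assuming a least-recently-used eviction policy. It is not Dynamo's implementation, and the class name `TieredKVStore` and its methods are hypothetical.

```python
from collections import OrderedDict

class TieredKVStore:
    """Two-tier store: a small fast tier backed by a larger, cheaper tier."""

    def __init__(self, fast_capacity):
        self.fast_capacity = fast_capacity
        self.fast = OrderedDict()  # stands in for GPU memory, kept in LRU order
        self.slow = {}             # stands in for host memory or storage

    def put(self, key, value):
        self.fast[key] = value
        self.fast.move_to_end(key)  # mark as most recently used
        while len(self.fast) > self.fast_capacity:
            old_key, old_value = self.fast.popitem(last=False)  # least recently used
            self.slow[old_key] = old_value  # offload instead of discarding

    def get(self, key):
        if key in self.fast:
            self.fast.move_to_end(key)
            return self.fast[key]
        if key in self.slow:
            value = self.slow.pop(key)  # reload into the fast tier on demand
            self.put(key, value)
            return value
        return None

store = TieredKVStore(fast_capacity=2)
for session in ("s1", "s2", "s3"):
    store.put(session, f"kv-for-{session}")
print(sorted(store.fast), sorted(store.slow))  # ['s2', 's3'] ['s1']
```

Because evicted entries move to the cheaper tier rather than being dropped, a returning session can reload its cached state instead of recomputing it, which is the cost saving the article describes.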

NVIDIA Dynamo will be made available in NIM microservices and supported in a future release of the company’s AI Enterprise software platform.
