Subscribe to our daily and weekly newsletters for the latest insights and exclusive material on premier AI coverage. Discover More

A recent study by scholars from Google Research and the University of California, Berkeley, illustrates that a surprisingly straightforward test-time scaling method can enhance the reasoning skills of large language models (LLMs). The crux? Augmenting sampling-based search, a strategy that depends on generating several replies and utilizing the model itself for validation.

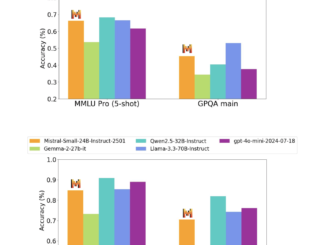

The main discovery is that even a basic execution of sampling-based search, employing random sampling and self-validation, can improve the reasoning capabilities of models like Gemini 1.5 Pro beyond those of o1-Preview on widely-used benchmarks. The implications of these findings may be significant for business applications and challenge the notion that extensively specialized training or complicated structures are always essential for attaining peak performance.

The boundaries of current test-time computation scaling

The currently favored technique for test-time scaling in LLMs involves training the model via reinforcement learning to generate extended responses with chain-of-thought (CoT) patterns. This method is utilized in models such as OpenAI o1 and DeepSeek-R1. While advantageous, these techniques often necessitate considerable investment during the training phase.

Another test-time scaling technique is “self-consistency,” where the model produces several responses to a query and selects the answer that appears most frequently. Self-consistency reaches its limitations when faced with multifaceted challenges, as in these instances, the most frequently occurring answer is not always the correct one.

Sampling-based search presents a more straightforward and scalable substitute for test-time scaling: Allow the model to create multiple responses and choose the optimal one through a validation process. Sampling-based search can enhance other test-time computation scaling methods and, as the researchers emphasize in their study, “it also possesses the unique benefit of being embarrassingly parallel and allows for arbitrary scaling: simply sample a greater number of responses.”

Most importantly, sampling-based search can be utilized with any LLM, even those that have not been specifically trained for reasoning tasks.

How sampling-based search operates

The researchers concentrate on a minimalistic execution of sampling-based search, employing a language model to both produce candidate responses and authenticate them. This process is a “self-validation” procedure, where the model evaluates its own outputs without the need for external ground-truth responses or symbolic validation systems.

The algorithm functions in several straightforward steps:

1—The algorithm starts by generating a collection of candidate solutions to the specified problem using a language model. This is executed by providing the model with the identical prompt multiple times and applying a non-zero temperature setting to produce a varied array of responses.

2—Each candidate’s response goes through a validation process in which the LLM is prompted multiple times to ascertain the correctness of the response. The verification results are subsequently averaged to create a final validation score for the response.

3—The algorithm identifies the highest-scored response as the final answer. If several candidates are closely rated, the LLM is asked to compare them pairwise and select the superior one. The response that wins the most pairwise evaluations is chosen as the final answer.

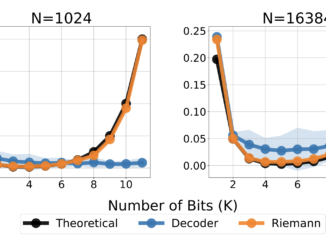

The researchers contemplated two significant aspects for test-time scaling:

Sampling: The quantity of responses produced by the model for each input challenge.

Verification: The number of validation scores calculated for each generated solution

How sampling-based search compares to alternative methods

The study revealed that reasoning performance consistently improves with sampling-based search, even as test-time computation is scaled considerably beyond the point at which self-consistency reaches saturation.

With sufficient scaling, this minimalistic implementation greatly enhances reasoning accuracy on benchmarks like AIME and MATH. For instance, Gemini 1.5 Pro outperformed o1-Preview, which has been specifically trained on reasoning tasks, and Gemini 1.5 Flash outshone Gemini 1.5 Pro.

“This not only emphasizes the significance of sampling-based search for scalability but also suggests its utility as a straightforward baseline for comparing other test-time computation scaling techniques and measuring actual enhancements in models’ search capabilities,” the researchers note.

It is essential to acknowledge that while the outcomes of search-based sampling are remarkable, the expenses can also become significant. For instance, with 200 samples and 50 verification steps for each sample, a query from AIME will yield approximately 130 million tokens, which amounts to $650 with Gemini 1.5 Pro. Nonetheless, this represents a very basic approach to sampling-based search and is amenable to optimization strategies.proposed in alternative research. By employing more sophisticated sampling and validation techniques, the costs of inference can be significantly diminished through the utilization of smaller models and by generating fewer tokens. For instance, utilizing Gemini 1.5 Flash for validation reduces expenses to $12 per inquiry.

Efficient self-verification methodologies

There is an active discussion regarding the capability of LLMs to authenticate their own outputs. The researchers pinpointed two primary approaches for enhancing self-verification during the time of testing:

Directly contrasting response candidates: Conflicts among candidate answers strongly suggest possible inaccuracies. By supplying the evaluator with several responses for comparison, the model can more effectively detect errors and fabrications, addressing a fundamental flaw in LLMs. The researchers characterize this as a case of “implicit scaling.”

Task-oriented rewriting: The researchers advocate that the ideal output format of an LLM is contingent upon the task at hand. Chain-of-thought is advantageous for tackling reasoning challenges, but replies become more straightforward to validate when articulated in a more formal, mathematically standard style. Evaluators can revise candidate responses into a more organized structure (e.g., theorem-lemma-proof) prior to assessment.

“We foresee that self-verification capabilities of models will swiftly evolve in the near future, as models learn to capitalize on the concepts of implicit scaling and appropriateness of output style, enhancing scaling rates for sampling-based searches,” the researchers assert.

Consequences for practical applications

The research reveals that a relatively straightforward approach can yield remarkable outcomes, potentially minimizing the necessity for intricate and expensive model configurations or training methodologies.

This technique also allows for scalability, enabling organizations to enhance performance by dedicating additional computational resources to sampling and validation. It empowers developers to push cutting-edge language models beyond their limits regarding intricate tasks.

“Since it complements other test-time computation scaling approaches, is parallelizable and permits arbitrary scaling, and allows for straightforward implementations that are evidently effective, we predict that sampling-based search will play an essential role as language models are assigned the task of solving increasingly complex challenges with expanding computational budgets,” the researchers note.

Be the first to comment