Google’s latest AlphaEvolve illustrates the transition of an AI agent

Subscribe to our daily and weekly newsletters for the most recent updates and exclusive material on leading AI developments. Learn More

Google’s latest AlphaEvolve illustrates the transition of an AI agent from laboratory demonstration to real-world application, backed by one of the industry’s most skilled technology firms.

Developed by Google’s DeepMind, this system autonomously modifies essential code and has already justified its existence within Google. It broke a 56-year-old record in matrix multiplication (fundamental to numerous machine learning tasks) and recaptured 0.7% of computing capacity throughout the company’s worldwide data centers.

While these outstanding achievements are significant, the more profound lesson for enterprise technology executives lies in how AlphaEvolve accomplishes these tasks. Its framework – comprising a controller, rapid-draft models, profound reasoning models, automated evaluators, and versioned memory – exemplifies the type of production-level infrastructure that ensures autonomous agents can be implemented safely at scale.

Google’s AI capabilities are arguably unmatched. The challenge is determining how to learn from it or even utilize it directly. Google has indicated that an Early Access Program is forthcoming for academic collaborators, with “wider availability” under consideration, but specifics remain scarce. In the meantime, AlphaEvolve serves as a model of best practices: If you aim for agents that engage with high-value tasks, you’ll require similar orchestration, testing, and safeguards.

Take into account the data center success. Google won’t disclose the value of the reclaimed 0.7%, yet its annual capital expenditures total tens of billions of dollars. Even a rough approximation suggests savings in the hundreds of millions each year—ample, as independent developer Sam Witteveen pointed out in our recent podcast, to fund the training of one of the leading Gemini models, projected to surpass $191 million for a version like Gemini Ultra.

VentureBeat was the first to disclose the AlphaEvolve news earlier this week. Now, we will delve deeper: how the system functions, the actual engineering standards, and the tangible steps organizations can take to create (or acquire) something similar.

1. Beyond basic scripts: The emergence of the “agent operating system”

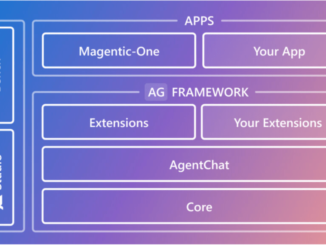

AlphaEvolve operates on what can be termed an agent operating system – a distributed, asynchronous pipeline designed for continuous enhancement on a large scale. Its foundational components include a controller, a duo of large language models (Gemini Flash for breadth; Gemini Pro for depth), a version-controlled program-memory database, and a team of evaluator workers, all calibrated for high throughput rather than merely low latency.

This design isn’t fundamentally novel, but its execution is. “It’s just an unbelievably effective execution,” Witteveen remarks.

The AlphaEvolve documentation depicts the orchestrator as an “evolutionary algorithm that progressively generates programs aimed at enhancing the score on the automated evaluation metrics” (p. 3); in essence, an “autonomous pipeline of LLMs tasked with refining an algorithm through direct modifications to the code” (p. 1).

Key takeaway for organizations: If your agent strategy involves unsupervised operations on high-value activities, prepare for analogous infrastructure: job queues, a versioned memory repository, service-mesh tracing, and secure sandboxing for any code produced by the agent.

2. The evaluator engine: propelling advancement with automated, objective insights

A vital component of AlphaEvolve is its stringent evaluation framework. Every iteration suggested by the duo of LLMs is accepted or denied based on a user-defined “evaluate” function that yields machine-readable metrics. This evaluation mechanism initiates with rapid unit-test checks on each proposed code alteration – straightforward, automatic tests (comparable to the unit tests developers typically write) that confirm the snippet still compiles and delivers the correct outputs on a few micro-inputs – before advancing the successful candidates to more demanding benchmarks and LLM-generated evaluations. This process runs concurrently, maintaining speed and safety.

In summary: Allow the models to propose solutions, then validate each one against trusted tests. AlphaEvolve also facilitates multi-objective optimization (enhancing latency and accuracy simultaneously), developing programs that achieve several metrics at once. Surprisingly, balancing multiple objectives can benefit a single target metric by fostering more diverse solutions.

Key takeaway for organizations: Production agents necessitate reliable scorekeepers. Whether this involves unit tests, comprehensive simulators, or canary traffic analysis. Automated evaluators serve as both your safety net and your growth driver. Before initiating an agentic initiative, ask: “Do we have a metric the agent can assess itself against?”

3. Intelligent model application, iterative code enhancement

AlphaEvolve approaches each coding challenge with a two-model sequence. Initially, Gemini Flash generates rapid drafts, providing the system with a broad array of concepts to explore. Following that, Gemini Pro examines those drafts in greater detail and returns a more concise set of improved candidates. Supporting both models is a lightweight “prompt builder,” a helper script that compiles the question each model addresses. It combines three types of context: prior coding attempts stored in a project database, any established guardrails or rules set by the engineering team, and pertinent external information such as research papers or developer documentation. This enriched backdrop allows Gemini Flash to explore broadly while Gemini Pro hones in on quality.

Unlike many agent demonstrations that adjust one function at a time, AlphaEvolve modifies entire repositories. It presents each alteration as a standard diff block – the same patch format engineers utilize for GitHub – enabling it to modify numerous files while keeping track. Following that, automated tests determine if the patch is accepted. Across repeated iterations, the agent’s memory of successes and failures expands, allowing it to propose higher-quality patches and reduce wasted compute on unproductive paths.

Key takeaway for organizations: Utilize less expensive, faster models for ideation, and then engage a more advanced model to refine the most promising concepts. Maintain a searchable record of every trial, as this memory accelerates future endeavors and can be leveraged across teams. Consequently, providers are eager to equip developers with innovative tools surrounding memory. Products like OpenMemory MCP, which offers a portable memory store, alongside newly developed long- and short-term memory APIs.“`html

In LlamaIndex are facilitating this form of lasting context nearly as effortlessly as logging.

OpenAI’s Codex-1 software-engineering agent, also introduced today, emphasizes the same trend. It launches concurrent tasks within a secure environment, conducts unit tests, and generates pull-request drafts—essentially a code-centric reflection of AlphaEvolve’s expansive search-and-evaluate cycle.

4. Measure to manage: targeting agentic AI for tangible ROI

AlphaEvolve’s concrete achievements – reclaiming 0.7% of data center capacity, reducing Gemini training kernel runtime by 23%, accelerating FlashAttention by 32%, and streamlining TPU design – share a common characteristic: they focus on sectors with precise metrics.

For data center scheduling, AlphaEvolve developed a heuristic evaluated using a simulator of Google’s data infrastructures grounded in historical workloads. For kernel optimization, the aim was to reduce actual runtime on TPU accelerators across a dataset of realistic kernel input dimensions.

Key takeaway for organizations: When embarking on your agentic AI journey, prioritize workflows where “improvement” is a measurable figure your system can assess—whether it’s latency, cost, error rate, or throughput. This emphasis enables automated searches and mitigates deployment risks because the agent’s output (often human-readable code, as in AlphaEvolve’s case) can be assimilated into existing review and validation processes.

This transparency enables the agent to enhance itself and showcase indisputable value.

5. Laying the foundation: crucial prerequisites for enterprise agentic success

While AlphaEvolve’s accomplishments are motivating, Google’s document is also explicit about its boundaries and requirements.

The main constraint is the necessity for an automated evaluator; challenges that need manual testing or “wet-lab” feedback are presently beyond the limits of this particular methodology. The system can demand considerable computing resources—“on the order of 100 compute-hours to evaluate any new solution” (AlphaEvolve paper, page 8)—which necessitates parallel processing and meticulous capacity management.

Before committing a significant budget to intricate agentic systems, technical leaders must pose essential inquiries:

Machine-gradable issue? Do we possess a clear, automatable metric against which the agent can evaluate its own effectiveness?

Computational capacity? Can we support the potentially computation-intensive inner cycle of generation, evaluation, and refinement, particularly during development and training?

Codebase & memory preparedness? Is your codebase organized for iterative, possibly difference-based, alterations? And are you able to implement the instrumented memory systems crucial for an agent to learn from its developmental history?

Takeaway for enterprises: The growing emphasis on robust agent identity and access management, as illustrated by platforms like Frontegg, Auth0, and others, also signals the evolving infrastructure needed to deploy agents that securely interact with various enterprise systems.

The agentic future is constructed, not merely conjured

AlphaEvolve’s communication for enterprise teams is multifaceted. Firstly, your framework surrounding agents is now considerably more vital than model intelligence. Google’s outline presents three foundational elements that should not be overlooked:

Deterministic evaluators that provide the agent with a clear score each time it enacts a modification.

Long-running orchestration that can balance rapid “draft” models like Gemini Flash with slower, more stringent models—whether that’s Google’s suite or a framework such as LangChain’s LangGraph.

Enduring memory so that each iteration expands upon the previous one rather than relearning from the ground up.

Organizations that already possess logging, testing frameworks, and version-controlled code libraries are closer than they realize. The subsequent step is to integrate those assets into a self-service evaluation loop so multiple agent-generated solutions can compete, ensuring only the highest-scoring patch is deployed.

As Cisco’s Anurag Dhingra, SVP and GM of Enterprise Connectivity and Collaboration, remarked to VentureBeat in an interview this week: “It’s happening, it is very, very real,” he stated about enterprises utilizing AI agents in manufacturing, warehouses, and customer service centers. “It is not a future prospect. It is occurring right now.” He cautioned that as these agents increasingly take on “human-like tasks,” the pressure on existing systems will be substantial: “The network traffic is going to surge,” Dhingra said. Your network, budget, and competitive advantage will likely experience that pressure before the hype cycle calms. Begin validating a contained, metric-driven use case this quarter—then expand on successful strategies.

Watch the video podcast I conducted with developer Sam Witteveen, where we delve deeply into production-grade agents and how AlphaEvolve is paving the way:

“`

Be the first to comment