Two persistent obstacles remain in artificial intelligence. Many capable language models require substantial computational resources, which puts them out of reach for smaller organizations and independent developers. Even when such models are available, their latency and size often make them impractical to run on everyday devices such as laptops or smartphones. There is also an ongoing need to ensure that these models operate safely, with appropriate risk assessments and built-in safeguards. These issues have driven the search for models that are both efficient and broadly accessible without sacrificing performance or safety.

Google AI Unveils Gemma 3: A Suite of Open Models

Google DeepMind has released Gemma 3, a family of open models designed to address these challenges. Built from the same research and technology used for Gemini 2.0, Gemma 3 is designed to run efficiently on a single GPU or TPU. The models come in four sizes (1B, 4B, 12B, and 27B parameters), each available in pre-trained and instruction-tuned versions. This range lets users choose the model that best fits their hardware and application requirements, making it easier for a wider community to integrate AI into their projects.
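Matching a model size to hardware usually starts with a back-of-the-envelope estimate: weight memory is roughly the parameter count multiplied by the bytes stored per parameter. The sketch below applies that rule to the four sizes. It assumes the nominal parameter counts equal the size labels and ignores the KV cache, activations, and framework overhead; the function names and the `headroom` safety factor are hypothetical, not part of any Gemma tooling.

```python
from typing import Optional

# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {
    "bf16": 2.0,  # 16-bit floating point
    "int8": 1.0,  # 8-bit quantized weights
    "int4": 0.5,  # 4-bit quantized weights
}

# Assumption: nominal parameter counts equal the size labels.
# Ordered smallest to largest (dicts preserve insertion order).
GEMMA3_PARAMS = {
    "1B": 1e9,
    "4B": 4e9,
    "12B": 12e9,
    "27B": 27e9,
}


def weight_memory_gb(params: float, precision: str) -> float:
    """Approximate weight-only memory in GB (1 GB = 1e9 bytes)."""
    return params * BYTES_PER_PARAM[precision] / 1e9


def largest_fitting_model(
    gpu_memory_gb: float, precision: str, headroom: float = 0.8
) -> Optional[str]:
    """Return the largest size whose weights fit in `headroom` of GPU memory.

    `headroom` (hypothetical) reserves the remainder for KV cache and runtime overhead.
    """
    budget = gpu_memory_gb * headroom
    best = None
    for name, params in GEMMA3_PARAMS.items():
        if weight_memory_gb(params, precision) <= budget:
            best = name  # keep overwriting, so the largest fitting size wins
    return best


if __name__ == "__main__":
    for name, params in GEMMA3_PARAMS.items():
        row = ", ".join(
            f"{p}: {weight_memory_gb(params, p):.1f} GB" for p in BYTES_PER_PARAM
        )
        print(f"{name:>4} -> {row}")
    print(largest_fitting_model(24, "bf16"))
    print(largest_fitting_model(80, "bf16"))
```

Under these assumptions the 27B weights need about 54 GB in bf16, which is consistent with running on a single accelerator with 80 GB of memory. A 24 GB card fits the 4B model in bf16, the 12B model at 8 bits, and the 27B model only at 4 bits. Real memory use is higher once long contexts fill the KV cache.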

Technical Advancements and Key Benefits

Gemma 3 is designed to deliver practical benefits in several key areas:

Efficiency and Portability: The models are designed to run quickly on modest hardware. For instance, the 27B variant performs strongly in evaluations while still fitting on a single GPU.

Multimodal and Multilingual Capabilities: The 4B, 12B, and 27B models accept both text and image input, enabling applications that reason about visual content alongside language. These models also support more than 140 languages, which makes them useful for serving diverse global audiences.

Extended Context Window: With a 128,000-token context window (32,000 tokens for the 1B model), Gemma 3 is well suited to tasks that involve large amounts of input, such as summarizing long documents or sustaining extended conversations.

Post-Training Techniques: The training pipeline combines reinforcement learning from human feedback with other post-training techniques that help align the model's responses with user expectations while maintaining safety.

Hardware Compatibility: Gemma 3 is optimized for NVIDIA GPUs as well as Google Cloud TPUs, which makes it adaptable across computing environments. This flexibility helps reduce the cost and complexity of deploying advanced AI solutions.
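The size-dependent limits described above (a text-only 1B model with a 32,000-token window; image-capable 4B, 12B, and 27B models with 128,000 tokens) can be captured in a small lookup table. The sketch below uses the article's rounded figures; `VariantSpec`, `GEMMA3_SPECS`, and `smallest_variant_for` are hypothetical names for illustration, not an official API, and the model card should be checked for exact limits.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class VariantSpec:
    """Hypothetical capability record for one Gemma 3 size."""
    context_tokens: int   # context window, using the article's rounded figures
    accepts_images: bool  # True if the variant takes image input


# Ordered smallest to largest; values follow the article's description.
GEMMA3_SPECS = {
    "1B": VariantSpec(context_tokens=32_000, accepts_images=False),
    "4B": VariantSpec(context_tokens=128_000, accepts_images=True),
    "12B": VariantSpec(context_tokens=128_000, accepts_images=True),
    "27B": VariantSpec(context_tokens=128_000, accepts_images=True),
}


def smallest_variant_for(
    prompt_tokens: int, max_new_tokens: int = 0, needs_images: bool = False
) -> Optional[str]:
    """Return the smallest size whose window holds prompt plus output and
    whose modality support matches the request, or None if nothing fits.

    Assumption: `prompt_tokens` already includes any tokens spent on images.
    """
    needed = prompt_tokens + max_new_tokens
    for name, spec in GEMMA3_SPECS.items():
        if needs_images and not spec.accepts_images:
            continue  # skip text-only variants for image requests
        if needed <= spec.context_tokens:
            return name
    return None


if __name__ == "__main__":
    print(smallest_variant_for(10_000))                      # text-only prompt
    print(smallest_variant_for(10_000, needs_images=True))   # prompt with an image
    print(smallest_variant_for(100_000, max_new_tokens=2_000))
    print(smallest_variant_for(200_000))                     # exceeds every window
```

Choosing the smallest variant that fits is only a capability check; larger sizes generally give better answers, so the final choice also depends on quality requirements and the memory budget.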

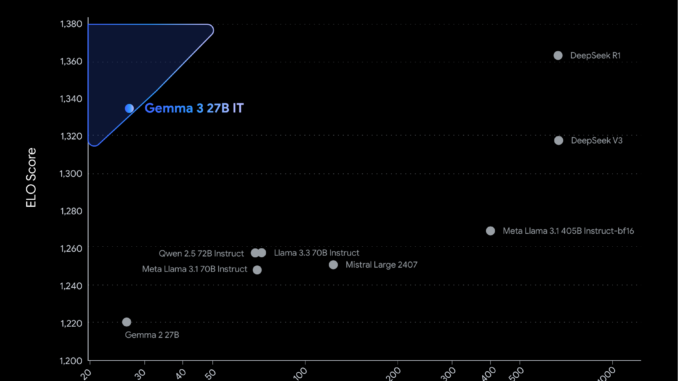

Performance Insights and Evaluations

Initial evaluations show that Gemma 3 performs reliably within its size class. In preliminary human-preference evaluations, the 27B variant reached an Elo score of 1338 on the LMArena (Chatbot Arena) leaderboard, indicating that it can deliver consistent, high-quality responses without requiring substantial hardware. Benchmarks also show that the models handle both text and images well, aided by a vision encoder that processes high-resolution images using an adaptive approach.
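Human-preference leaderboards such as LMArena report ratings on an Elo-style scale. Assuming the 1338 figure is such a rating, the standard Elo expected-score formula translates a rating gap into an expected win rate in pairwise comparisons. For a model rated $R_A$ against one rated $R_B$:

$$
E_A = \frac{1}{1 + 10^{(R_B - R_A)/400}}
$$

As a purely hypothetical illustration (not a reported result), against an opponent rated 100 points lower, $R_B = R_A - 100$:

$$
E_A = \frac{1}{1 + 10^{-100/400}} = \frac{1}{1 + 10^{-0.25}} \approx \frac{1}{1.562} \approx 0.64
$$

Under this model, a 100-point lead corresponds to being preferred in roughly 64% of head-to-head comparisons, while equal ratings give 0.5.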

The models were trained on a large and diverse dataset of text and images, up to 14 trillion tokens for the largest variant. This extensive training supports their ability to handle a broad range of tasks, from language understanding to visual analysis. The wide adoption of earlier Gemma models, together with an active community that has built many derivative variants, underscores the practical value and reliability of this approach.

Conclusion: A Measured Approach to Open, Accessible AI

Gemma 3 represents a careful step toward making state-of-the-art AI more accessible. Available in four sizes, with text and image understanding in the 4B, 12B, and 27B models and support for more than 140 languages, the models offer an extended context window and are optimized to run efficiently on common hardware. Their design reflects a balanced approach: strong performance combined with provisions for safe use.

Ultimately, Gemma 3 is a practical answer to longstanding challenges in AI deployment. It lets developers build advanced language and vision capabilities into a wide range of applications while prioritizing accessibility, reliability, and responsible use.

Check out the models on Hugging Face and the technical details. All credit for this research goes to the researchers of this project. Also, feel free to follow us on Twitter, and don't forget to join our 80k+ ML SubReddit.


Asif Razzaq is the CEO of Marktechpost Media Inc. As an entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a broad audience. The platform draws more than 2 million monthly views, reflecting its popularity among readers.
